Microsoft’s Phi-3-mini, with 3.8 billion parameters, may rival GPT-3.5, a sign of a possible shift toward “small language models.”

Microsoft announced on Tuesday that it has released a new, free AI language model called Phi-3-mini. The model is smaller and cheaper to run than larger models such as OpenAI’s GPT-4 Turbo. Thanks to its compact size, it can run locally on smartphones without an internet connection, offering capabilities similar to those of the free version of ChatGPT.

In AI, a language model’s size is often measured by its parameter count. Parameters are the numerical values in a neural network that determine how the model processes and generates text.

Parameters are learned during training on large datasets, effectively encoding the model’s knowledge as numbers. In general, more parameters let a model handle more nuanced and complex language tasks, but they also demand more computing power to train and run.
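To make “counting parameters” concrete, here is a minimal sketch that tallies the weights and biases in a small, hypothetical fully connected network. The layer sizes are invented for illustration and have nothing to do with Phi-3’s actual architecture; real language models are built from many more (and different) layers, but the counting principle is the same.

```python
def dense_layer_params(n_in: int, n_out: int, bias: bool = True) -> int:
    """Parameters in one fully connected layer: an n_in x n_out weight matrix plus an optional bias vector."""
    return n_in * n_out + (n_out if bias else 0)


def count_params(layer_sizes: list[int]) -> int:
    """Total parameters in a stack of dense layers described by their widths, e.g. [512, 1024, 512]."""
    return sum(
        dense_layer_params(n_in, n_out)
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


# Hypothetical toy network: 512 inputs -> 1024 hidden units -> 512 outputs.
toy_sizes = [512, 1024, 512]
print(f"{count_params(toy_sizes):,} parameters")  # → 1,050,112 parameters
```

Even this tiny network has about a million parameters; Phi-3-mini has several thousand times as many.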

Today’s largest language models, such as Google’s PaLM 2, have hundreds of billions of parameters. OpenAI’s GPT-4 is rumored to have more than a trillion parameters, spread across eight 220-billion-parameter models in an arrangement known as mixture-of-experts.

Both models require data-center GPUs and supporting infrastructure to run.
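Mixture-of-experts is easier to picture with a toy example. This minimal pure-Python sketch assumes a simple linear gate with top-k softmax routing and uses trivial scalar functions as hypothetical “experts”: the gate scores every expert for a given input, only the highest-scoring few are actually run, and their outputs are blended by weight. It is a generic illustration of the technique, not GPT-4’s or Mixtral’s actual implementation, and all names and numbers are made up.

```python
import math
from typing import Callable, Sequence


def softmax(xs: Sequence[float]) -> list[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float,
    experts: Sequence[Callable[[float], float]],
    gate_weights: Sequence[float],
    top_k: int = 2,
) -> float:
    """Route input x to the top_k experts picked by a (hypothetical) linear gate and mix their outputs."""
    # Linear gate: one score per expert.
    scores = [w * x for w in gate_weights]
    # Keep only the top_k highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalize the gate probabilities over the chosen experts only.
    probs = softmax([scores[i] for i in chosen])
    return sum(p * experts[i](x) for p, i in zip(probs, chosen))


# Eight toy "experts", echoing the eight-model setup described above.
experts = [lambda x, k=k: k * x for k in range(1, 9)]
gate_weights = [0.1, 0.5, -0.2, 0.9, 0.3, -0.7, 0.8, 0.0]

print(moe_forward(2.0, experts, gate_weights, top_k=2))
```

The design point is that only `top_k` experts run for each input, so the compute per input is far smaller than the total parameter count would suggest, although all experts still have to be held in memory.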

With Phi-3-mini, Microsoft aimed much smaller: the model has just 3.8 billion parameters and was trained on 3.3 trillion tokens. That makes it suitable for the consumer GPUs and AI-accelerator hardware found in smartphones and laptops.
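A rough back-of-the-envelope calculation shows why 3.8 billion parameters can fit on consumer devices. This sketch assumes memory is dominated by the model weights alone and ignores activations, the attention cache, and runtime overhead; the precisions listed are common choices for illustration, not necessarily the formats Microsoft ships.

```python
PARAMS = 3.8e9  # Phi-3-mini parameter count, as stated in the article

# Bytes per parameter at common numeric precisions (assumed formats, for illustration only).
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gib(n_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed for the weights alone, in GiB (2**30 bytes)."""
    return n_params * bytes_per_param / 2**30


for fmt, nbytes in BYTES_PER_PARAM.items():
    print(f"{fmt:>10}: {weight_memory_gib(PARAMS, nbytes):5.1f} GiB")
```

At 16-bit precision the weights alone need roughly 7.1 GiB, while 4-bit quantization cuts that to under 2 GiB, which helps explain how a model of this size can fit in a phone’s or laptop’s memory.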

It follows two earlier small models from Microsoft: Phi-2, released in December 2023, and Phi-1, introduced in June 2023.

Phi-3-mini has a 4,000-token context window, and Microsoft has also developed a 128,000-token version called “phi-3-mini-128K.” In addition, Microsoft plans to release 7-billion- and 14-billion-parameter versions of Phi-3, which it describes as “much more capable” than phi-3-mini.

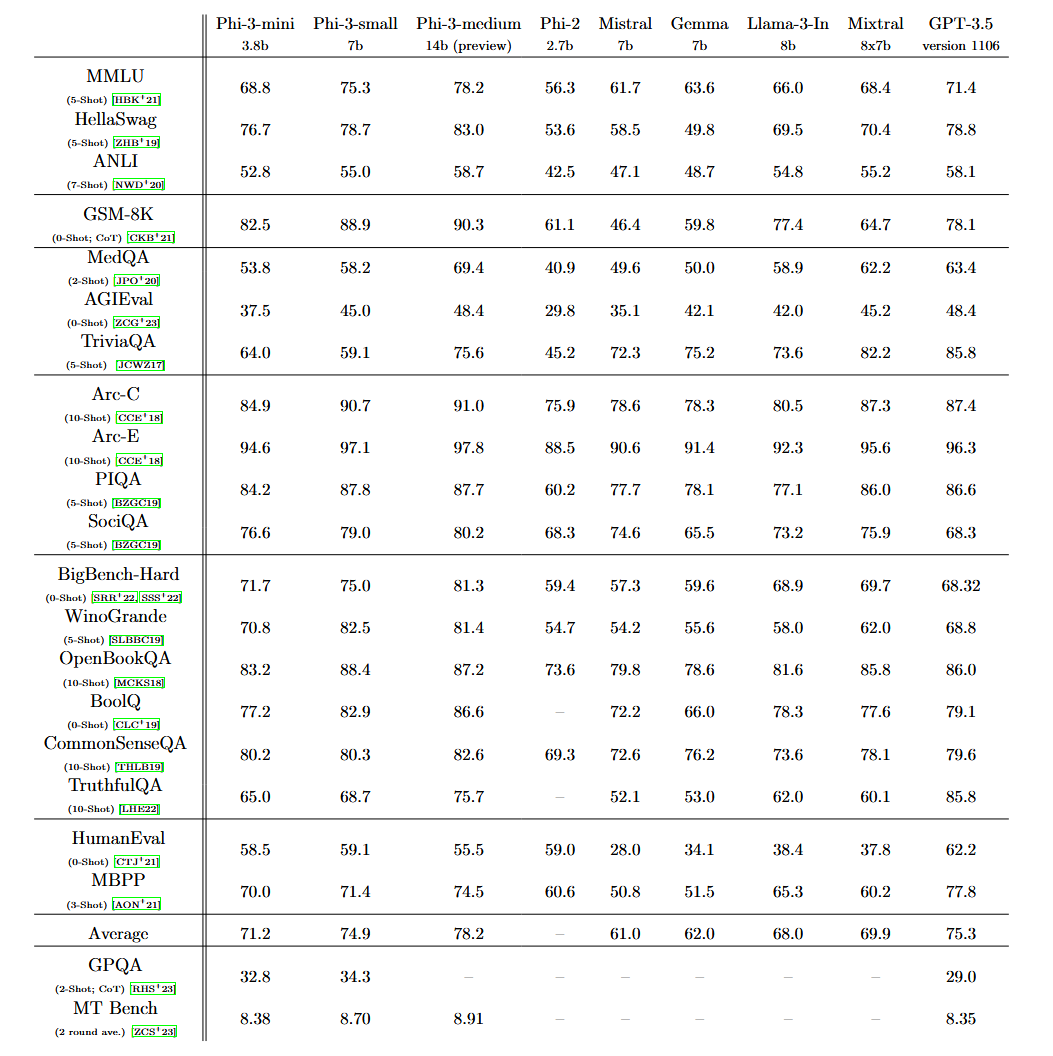

In a paper titled “Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone,” Microsoft claims that Phi-3-mini performs on par with models such as Mixtral 8x7B and GPT-3.5. Mixtral 8x7B, from the French AI firm Mistral, uses a mixture-of-experts architecture, and GPT-3.5 powers the free version of ChatGPT.

“Most models that operate on local devices still require substantial hardware,” AI researcher Simon Willison notes. “However, Phi-3-mini needs less than 8GB of RAM and can generate tokens quickly even on a standard CPU. It’s available under an MIT license and performs well on a $55 Raspberry Pi, producing results that rival those from models four times its size.”

How did Microsoft achieve capabilities similar to those of GPT-3.5, which is believed to have at least 175 billion parameters, with such a small model? The researchers credit carefully selected, high-quality training data, an approach that originally drew on textbook-style material.

“The innovation lies entirely in our dataset for training, a scaled-up version of the one used for phi-2, composed of heavily filtered web data and synthetic data,” writes Microsoft. “The model is also further aligned for robustness, safety, and chat format.”

The environmental impact of AI models and data centers has been widely discussed, including on Ars. As research and techniques advance, machine learning experts may keep improving the capabilities of smaller AI models, potentially reducing the need for larger ones in everyday tasks.

That could save money over time and consume far less energy, significantly reducing AI’s environmental impact. If their performance claims hold up, models like Phi-3 could be a step in that direction.

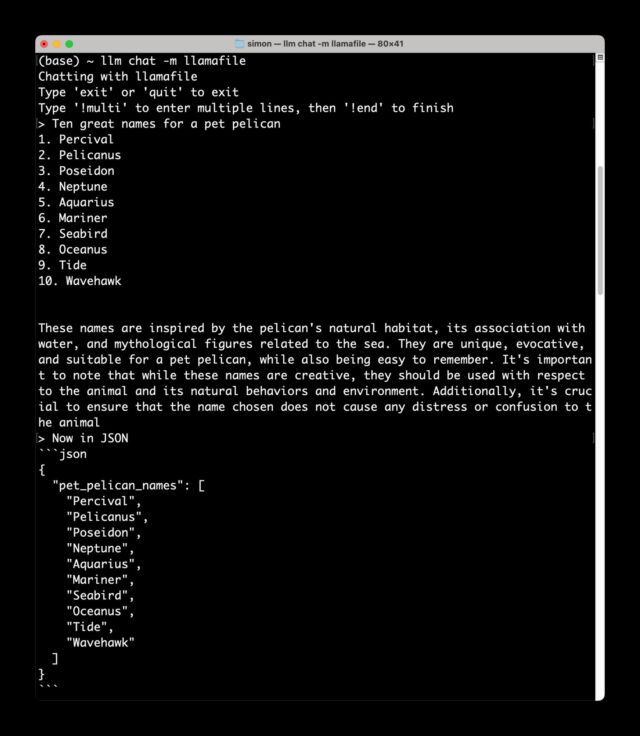

Phi-3 is available now on Microsoft’s Azure cloud platform, as well as through partnerships with the machine learning model hub Hugging Face and with Ollama, a framework for running models locally on Macs and PCs.

What do we think?

I think Phi-3-mini could change how we use AI every day. It’s small but capable, reportedly on par with GPT-3.5, and it runs on phones and laptops.

That means more people could use AI anywhere, without high costs or a strong internet connection.

If Phi-3-mini lives up to its promise, it could reduce the need for large AI models, saving both money and energy. It’s exciting to see AI become more accessible and eco-friendly.